|

4.6.1 Word Graph Generation

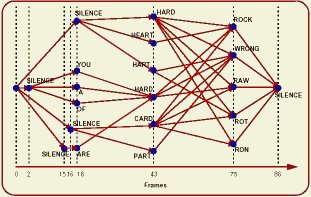

A word graph, sometimes called a lattice, is a point-to-point description of the search through the language model. The generated word graph can be used in a second decoding pass, usually referred to as lattice rescoring, to limit the search space. It can also be reused to test new acoustic models or language models efficiently, with far fewer resources than a full decoding pass, which makes the task more computationally feasible. Again, we will start by decoding a single utterance, using the same utterance as in the previous sections and the parameter file from Section 4.2.3. Instead of outputting a single hypothesis and score, or an N-best list, we will create a lattice of all the possibilities. First, we have to enable word graph generation in our parameter file (params_decode.sof). We do this by changing the value of "algorithm" from "DECODE" to "GRAPH_GENERATION":

@ Sof v1.0 @

@ HiddenMarkovModel 0 @

algorithm = "GRAPH_GENERATION";

implementation = "VITERBI";

num_levels = 3;

output_mode = "FILE";

output_file = "hypo.out";

frontend_file = "frontend.sof";

audio_database_file = "audio.sof";

language_model_file = "lm_model_update.sof";

acoustic_model_file = "ac_model_update.sof";
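Before running the decoder, it may help to picture what a second pass does with a word graph. The following is a minimal Python sketch of lattice rescoring, not the toolkit's implementation or file format: the `Arc` layout, the `rescore` function, the toy bigram table, and the scores are all hypothetical and chosen only for illustration. Each arc keeps the acoustic log-score from the first pass, and a new language model is applied while searching only the paths that the graph contains.

```python
from collections import defaultdict, namedtuple

# One arc of a (hypothetical) word graph: a word hypothesized between two
# nodes, plus the acoustic log-score it received in the first pass.
Arc = namedtuple("Arc", "start end word acoustic")


def rescore(arcs, start_node, end_node, lm_score, lm_weight=1.0):
    """Return (best_score, best_words) over all paths in the graph.

    Assumes nodes are integers in topological order, i.e. every arc goes
    from a lower node id to a higher one. lm_score(prev_word, word) is the
    new language model, in the log domain.
    """
    outgoing = defaultdict(list)
    for arc in arcs:
        outgoing[arc.start].append(arc)

    # best[(node, last_word)] = (score, words so far); the last word is part
    # of the state because a bigram model needs it as context.
    best = {(start_node, "<s>"): (0.0, [])}
    for node in sorted(outgoing):
        states = [(key, val) for key, val in best.items() if key[0] == node]
        for (_, prev), (score, words) in states:
            for arc in outgoing[node]:
                new = score + arc.acoustic + lm_weight * lm_score(prev, arc.word)
                key = (arc.end, arc.word)
                if key not in best or new > best[key][0]:
                    best[key] = (new, words + [arc.word])

    finals = [val for key, val in best.items() if key[0] == end_node]
    return max(finals, key=lambda val: val[0])


# Toy graph with two competing words in each of two time slots.
arcs = [
    Arc(0, 1, "ONE", -10.0),
    Arc(0, 1, "NINE", -9.5),
    Arc(1, 2, "ZERO", -8.0),
    Arc(1, 2, "OH", -8.2),
]

# Hypothetical bigram log-probabilities; unseen pairs get a flat penalty.
BIGRAMS = {
    ("<s>", "ONE"): -1.0,
    ("<s>", "NINE"): -2.0,
    ("ONE", "ZERO"): -1.0,
    ("ONE", "OH"): -0.5,
    ("NINE", "ZERO"): -1.5,
}


def toy_lm(prev, word):
    return BIGRAMS.get((prev, word), -5.0)


acoustic_only = rescore(arcs, 0, 2, lambda prev, word: 0.0)
with_new_lm = rescore(arcs, 0, 2, toy_lm)
print(acoustic_only[1], with_new_lm[1])
```

With the acoustic scores alone the best path is NINE ZERO, while the toy bigram model moves the best path to ONE OH. The point of the sketch is that the second pass only compares paths already stored in the graph, so trying a different language model does not require repeating the full search.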

Go to the directory:

Command: isip_recognize -parameter_file params_decode.sof $ISIP_TUTORIAL/databases/lists/identifiers_test.sof -verbose ALL

Version: 1.16 (not released) 2002/09/25 00:20:53

loading front-end: ../../recipes/frontend.sof

loading language model: ../../models/lm_model_update.sof

loading acoustic model: ../../models/ac_model_update.sof

loading audio database: ./audio_db.sof

opening the output file: ./hypo.out

processing file 1 (st_9z59362a): ../../features/st_9z59362a.sof

ref:

|