The prototype dialog system includes taking unconstrained speech queries to obtain information

needed to navigate the Mississippi State University campus and the

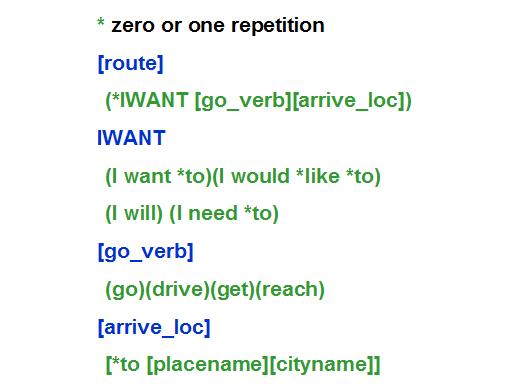

surrounding city of Starkville. The system specifically handles navigation queries of address, direction,

list of places, distance and information about the MSU campus and resolves those queries by employing

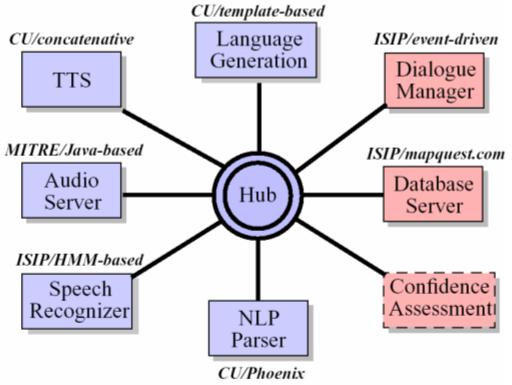

speech recognition, natural language understanding and user-centered dialog control.
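To make the five query categories concrete, here is a minimal keyword-matching sketch that maps a recognized utterance to one of them. The cue phrases and the `classify_query` function are hypothetical: the text does not say how the system's natural language understanding component works, and a keyword matcher is only a deliberately simple stand-in for it.

```python
# Hypothetical cue phrases for the five query types named in the text.
# Dicts keep insertion order, so more specific types are checked first.
QUERY_CUES = {
    "distance": ["how far", "distance"],
    "direction": ["how do i get", "how to get", "directions"],
    "address": ["address", "where is"],
    "list_of_places": ["list", "what restaurants", "which"],
    "information": ["tell me about", "information", "what is"],
}


def classify_query(utterance: str) -> str:
    """Return the first query type whose cue phrase occurs in the utterance."""
    text = utterance.lower()
    for query_type, cues in QUERY_CUES.items():
        if any(cue in text for cue in cues):
            return query_type
    # No cue matched; a real dialog manager would ask the user to rephrase.
    return "unknown"
```

For example, `classify_query("How far is the Union from Lee Hall?")` returns `"distance"`. Ordering the checks from most to least specific is a design choice that keeps a broad cue such as "what is" from swallowing "what is the distance to ...".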

Speech recognition is performed with the publicly available ISIP recognition toolkit, which implements a standard speaker-independent continuous speech recognition system based on hidden Markov models (HMMs). The recognizer processes the acoustic samples received from the audio server and produces the word sequence most likely to correspond to the user's utterance.
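HMM recognizers commonly find the most likely hidden sequence with Viterbi decoding. The sketch below runs log-domain Viterbi over a toy two-state discrete HMM. The states, observation symbols, and probabilities are invented for illustration and do not reflect the ISIP toolkit's API or models. A real recognizer typically searches over phone and word networks with continuous (often Gaussian-mixture) emission densities and prunes the search, but the underlying recurrence is the same.

```python
import math

# Toy model (hypothetical): frames are labeled by energy level "low"/"high".
STATES = ["silence", "speech"]
START_P = {"silence": 0.8, "speech": 0.2}
TRANS_P = {
    "silence": {"silence": 0.7, "speech": 0.3},
    "speech": {"silence": 0.2, "speech": 0.8},
}
EMIT_P = {
    "silence": {"low": 0.9, "high": 0.1},
    "speech": {"low": 0.3, "high": 0.7},
}


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (best log-probability, best state path) for a discrete HMM."""
    # delta[s]: log-probability of the best path ending in state s so far.
    delta = {
        s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
        for s in states
    }
    backpointers = []
    for obs in observations[1:]:
        new_delta, pointers = {}, {}
        for s in states:
            # Pick the predecessor that maximizes the path score into s.
            prev, score = max(
                ((p, delta[p] + math.log(trans_p[p][s])) for p in states),
                key=lambda item: item[1],
            )
            new_delta[s] = score + math.log(emit_p[s][obs])
            pointers[s] = prev
        delta = new_delta
        backpointers.append(pointers)
    # Trace back from the best final state.
    last = max(states, key=lambda s: delta[s])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return delta[last], path


log_prob, best_path = viterbi(["low", "high", "high", "low"], STATES, START_P, TRANS_P, EMIT_P)
```

On the observations `["low", "high", "high", "low"]`, this yields the path silence, speech, speech, speech with probability of about 0.0203. Working in log space avoids numerical underflow when the products of many small probabilities are taken over long utterances.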